Free online health courses

Medkurs.no is a Norwegian website that offers free online health courses with the aim of spreading health knowledge.

Latest courses

Rust prevention: Tips for protecting your plumbing system

Rust can lead to corrosion, leaks and costly repairs if it is not kept in check. Fortunately, you can take several proactive steps to protect your plumbing system against…

7 tips for a successful roofing project

When you picture your home, what is the first line of defense against relentless drumming rain, the scorching heat of the sun and the grating…

Home insulation: How to improve energy efficiency and comfort

For many people, the mere mention of home insulation conjures up images of dusty attics, hassle and up-front costs. Yet I invite you to set these preconceptions…

The link between oral hygiene and heart health

What comes to mind when you think about keeping your heart healthy? You might picture running on a treadmill, choosing heart-friendly food,…

Dog bite prevention: Increasing safety, reducing risk

To reduce the number of dog bite injuries, adults and children should be taught about bite prevention, and dog owners should practice responsible ownership. Understanding a dog's body language is…

Low water pressure in your house? Here's what may be happening

Stable water pressure matters for more than comfort; it reflects the health of your home's lifelines. Reduced pressure can be an early warning sign of lurking problems in…

Wisdom from the locksmith: Tips and tricks for a smooth door-lock replacement

Replacing door locks is an important step in keeping your property safe and secure. Whether you have experienced a security breach, have moved to…

Cozy creations: Explore the latest trends in knitwear fashion

As the seasons change and the temperature drops, nothing beats wrapping yourself in the warmth and comfort of knitted garments. From chunky sweaters…

Tips for renovating your plumbing

When you step into the sanctuary of your home, the last thing you want to battle is the ominous drip-drip-drip of a faulty faucet or…

Types of wills: Which one is right for you?

When the countless chapters of our lives finally close, our last will and testament becomes the final story we leave behind. It is…

How to lay chevron parquet flooring

The intricate pattern that makes chevron parquet so alluring is also what intimidates most bold DIY enthusiasts and even seasoned professionals. Fear not,…

What to consider when it comes to fences

Fences are more than property boundaries; they are practical features that contribute to a home's security, privacy and overall aesthetics. This exploration delves into…

Solar energy and the environment: the good, the bad and the ugly

The allure of solar energy is undeniable. With every sunrise we get the chance to harness the sun's energy, reduce our dependence on fossil fuels and mitigate the…

Car air conditioning: How to maximize the cooling

When the first beads of sweat trickle down your forehead, you can't help but remember the sweet joy of stepping into a crisp, cool,…

Athleisure Allure: Uplifting leisurewear for the modern woman

Why is the evolution of leisurewear so significant, and why should it resonate with you, the modern woman? For starters, it symbolizes a break…

Tips for getting a personal loan despite a low credit score!

A low credit score should not be an obstacle to getting a personal loan. Even with a low credit score, there are options available for…

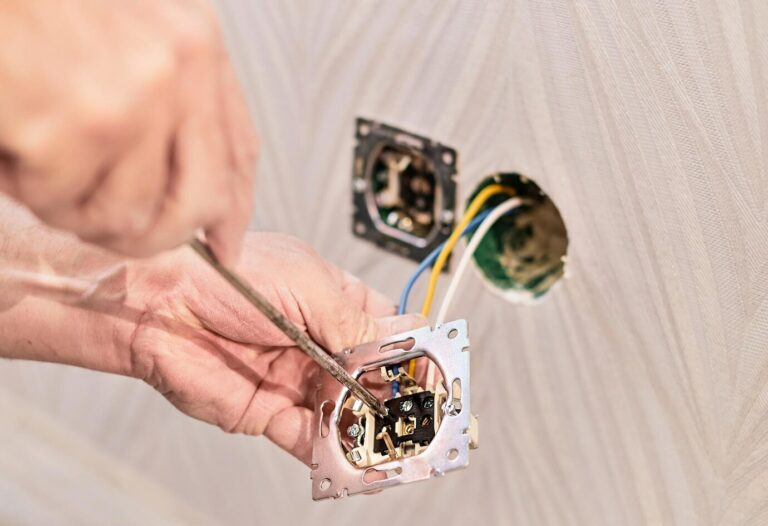

Doing a DIY wiring project? Avoid these dangerous mistakes

In an age of how-to videos and endless online tutorials, the thrill of rolling up your sleeves to tackle a DIY wiring project can be irresistible. The sheer satisfaction…

5 ways to encourage positive dog behavior

Does your dog constantly misbehave and drive you up the wall? Do the squabbles cause strife in your household? Well, fear not, for I am about…

Celebrity style dos and don'ts

Have you ever flipped through a glossy magazine or scrolled through social media and admired celebrities' stunning outfits? Yes, we all have. It…

Car lock repair: Troubleshooting tips from professional auto locksmiths

Car lock problems are a common issue that many car owners run into at one point or another. When your car lock does not work as it should,…

Common lock malfunctions: Identifying the culprits

Lock failures can be extremely frustrating and can leave you locked out of your home, car or office at the most inconvenient moments. Whether it…

Wrap up in style: Unveiling the hottest jacket trends for 2023

It is always exciting to discover the latest fashion trends, especially when it comes to jackets. As 2023 approaches, fashion enthusiasts and trendsetters are waiting eagerly…

6 style tips for cute and comfortable road-trip outfits

Road trips can be a lot of fun, but they can also be a challenge to dress for. You want to be comfortable during the long hours of…

Why is it so important to train your dog?

Imagine a world where dogs and their owners communicated effortlessly, understood each other completely and lived in perfect harmony. It may seem like a…

Stylish sweaters: Tips on how to dress to impress

Sweaters are a staple of every fashion-conscious person's wardrobe. They are versatile, cozy and can instantly elevate any outfit. Whether you are dressing…

Why staffing agencies are essential to the healthcare sector

The modern healthcare sector is characterized by the use of temporary staff and contract labor. This of course does not apply to every area, and there are large differences…

6 alarming diseases that pets can transmit to humans

Picture this: You are curled up on the sofa with your beloved furry friend by your side. As you stroke the soft fur, you feel…

Pipe insulation: Preventing freezing before it happens

Insulating your pipes is an important measure for making sure your plumbing system lasts and performs efficiently. You can avoid costly repairs and potential…

Elegant and confident: Expert tips for styling a black dress

The black dress has an undeniable appeal. It is a timeless and versatile garment that has been a staple of every woman's wardrobe for…

9 things to consider before seeing a skincare specialist

Are you tired of having a love-hate relationship with your skin? Do skincare products and routines feel like a maze you can't find your way through? If so,…

Driver's license on your phone: A practical solution

Having your driver's license available on your phone can be a practical and simple solution for many drivers. It can also help reduce the risk of…

Complex communication and augmentative and alternative communication course: A guide to improving communication skills

Introduction: Complex communication and augmentative and alternative communication course. Complex communication and augmentative and alternative communication (AAC; Norwegian abbreviation ASK) are important for individuals with communication difficulties and for their support persons. By…

A thorough guide to the Applied Behavior Analysis (ABA) course

From Medkurs.no. Introduction to applied behavior analysis: Applied behavior analysis (ABA) is a scientific approach to studying and understanding behavior, especially in people with autism spectrum disorders and…

Care for rheumatological diseases course

A free online health course from Medkurs.no. Rheumatological diseases can bring both physical and psychological challenges, and the right support is crucial for patients' quality of life.

Clinical skills and exam preparation course: Tips and insights for optimal success

A Medkurs.no guide. Welcome to a useful and informative guide from Medkurs.no, where we offer free online health courses. Please note that the courses are not…

Course in clinical decision-making and diagnostics: A guide to improving clinical skills and patient outcomes

Course in clinical decision-making and diagnostics: A guide to improving clinical skills and patient outcomes, offered by Medkurs.no. Introduction: Why a course in clinical decision-making…

Care for brain and nervous system disorders course: Support and guidance for caregivers

Introduction: What is the care for brain and nervous system disorders course? Welcome to Medkurs.no! We are proud to offer free online health courses that can…

Prevention and treatment of pressure ulcers course: Everything you need to know

Prevention and treatment of pressure ulcers course: Learn essential skills with free online health courses from Medkurs.no. Introduction: What are pressure ulcers, and why are prevention and…

Course in strength training and health: Master health-promoting training methods

An offer from Medkurs.no, your source of free online health courses. Welcome! At Medkurs.no we offer you free courses in strength training and…

Advanced life support (ALS) course: Learn the skills to save lives

Advanced life support (ALS) course at Medkurs.no: Learn the skills to save lives. In today's busy and hectic society, knowledge of life-saving skills…

Course in sexual health in older adults: A guide to optimal sexual satisfaction and well-being

Course in sexual health in older adults: A guide to optimal sexual satisfaction and well-being, by Medkurs.no. At Medkurs.no we understand that sexual health is a…

Course in trauma-focused care: A guide for committed caregivers

Welcome to the course in trauma-focused care from Medkurs.no, a guide for committed caregivers. In a world where traumatic experiences are unfortunately part of…

Nutrition competence and dietetics course: The road to a healthier life

From Medkurs.no. Nutrition and diet play an important role in our lives and our health. Medkurs.no offers free online health courses, including Nutrition competence and dietetics…

Course in health work in asylum reception centers and refugee housing

An overview from Medkurs.no. Given the need for more knowledge and understanding of health work in asylum reception centers and refugee housing, Medkurs.no has developed a free online course that…

Communicating with hearing-impaired people in health services course: The road to more inclusive and accessible health services

Communicating with hearing-impaired people in health services course from Medkurs.no: Learn to create more inclusive health services. Medkurs.no offers a free online health course that focuses on…

Managing chronic lung diseases course: A guide to better health and quality of life

Managing chronic lung diseases course: A guide from Medkurs.no to better health and quality of life, completely free! Medkurs.no aims to help you…

Course in fertility and assisted reproduction: A guide to help you on the journey to parenthood

Course in fertility and assisted reproduction: A helping hand on the road to parenthood. In a world where more and more people experience challenges related to fertility,…

Course in the health rights of people with disabilities

Introduction: Why this course? Health rights for people with disabilities are an important topic that everyone working in the health and care sector should know about, as should…

Care for Parkinson's disease course: A guide to thorough care and support

Care for Parkinson's disease course: A helpful guide. Parkinson's disease is a neurological disorder that affects motor skills and cognitive function in those affected. Care…

Course in musculoskeletal health: Learn to take care of your muscles and joints

Course in musculoskeletal health: Information and opportunities. What does the course in musculoskeletal health involve? Taking care of musculoskeletal health is important for all of us…

Course in health and immigrants: Free online health courses from Medkurs.no

Introduction: Why a course in health and immigrants matters for good health literacy. In an increasingly globalized world, immigration is an important factor in our…

Course in user involvement and empowerment in health services: A guide to greater patient involvement and better health outcomes

Course in user involvement and empowerment in health services: A guide to greater patient involvement and better health outcomes, from Medkurs.no. Introduction: Why are user involvement and empowerment important in…

Violence in close relationships: Management and support course

A lively guide from Medkurs.no. Violence in close relationships is a serious and widespread social problem that affects many people, both directly and indirectly. As…

Course in art and culture in health services: A path to improved health and well-being

Welcome to Medkurs.no, a website that offers free online health courses. In this article we take a closer look at the topic Course in art and…

Care for the critically ill course: Learn to support and care for your loved ones in their most difficult times

Care for the critically ill course: Support and care for your loved ones in their most difficult times. When someone we love becomes critically ill,…

Course in treating autoimmune diseases: Learn how to strengthen your immune system and improve your quality of life

Course in treating autoimmune diseases from Medkurs.no: Learn how to strengthen your immune system and improve your quality of life, completely free. At Medkurs.no we are proud to…

Practical home nursing course: Learn to care for your loved ones

Practical home nursing course from Medkurs.no: Learn to care for your loved ones with free online health courses. Taking care of a sick,…

Care for insomnia and sleep disorders course: A comprehensive guide

Care for insomnia and sleep disorders course: A free online health course from Medkurs.no. Sleep is one of the most important factors for good health and well-being. However,…

Course in health-promoting workplaces: Maximize efficiency and well-being at work

Introduction: Free course in health-promoting workplaces from Medkurs.no. In a world where stress and work pressure are an ever-growing challenge, it is more important than…

Course in complex and rare diagnoses: A guide for professionals and relatives

In this article we give an overview of the courses in complex and rare diagnoses that Medkurs.no offers. Medkurs.no is known for offering free…

Get to know the Health and lifestyle change course: The road to a healthier, happier life

From Medkurs.no. Health and lifestyle play a crucial role in our overall well-being, and making positive changes in these areas can transform your life.

Metabolic disorders and care needs course: A guide for health professionals and relatives

Metabolic disorders and care needs course: An indispensable resource for both health professionals and relatives. Introduction: Metabolic disorders affect thousands of people across the country, and their care needs can…

Course in pre- and postoperative care: Everything you need to know

Course in pre- and postoperative care: The ultimate free online course at Medkurs.no. Working with patients before and after surgery can be challenging. It is…

Natural treatment and holistic health course: Become an expert in alternative health

Medkurs.no: Find the right natural therapy and holistic health course for you, free and without obligation! At a time when health and well-being are becoming increasingly important to…

Spirituality and care in health services course: A guide for health professionals

Introduction: How the Spirituality and care in health services course can strengthen your practice. As part of our aim to share sound knowledge within health…

Heart disease and heart failure care course: Everything you need to know

From Medkurs.no. Heart disease and heart failure are serious health problems that affect many people around the world. Learning more about these conditions and how best to…

Voice hygiene and communication course: Use your voice economically and communicate effectively

Voice hygiene and communication course: A guide to free online health courses at Medkurs.no. At Medkurs.no we offer a wide range of free online health courses, among…

Course in contraception counseling and sexual health: A guide to developing your skills and strengthening the community

At Medkurs.no we offer free online health courses designed to give you greater competence and understanding across a range of health areas. One of our most requested…

Vision and hearing care for older adults course: Understanding and helping older adults with sensory challenges

Introduction: Why is the Vision and hearing care for older adults course important? As we age, it is natural to experience some changes in vision and hearing. At…

Course in health economics and resource management: A guide to education and career development

Introduction: Why choose a course in health economics and resource management? Health economics and resource management are important factors that affect the healthcare system. Having expertise in these fields can…

Terminology and medical language course: Understanding and navigating the healthcare system with the right terminology

From Medkurs.no. Introduction: Free online courses from Medkurs.no. Welcome to Medkurs.no, your platform for free online health courses. We offer a range of courses in…

Integrating people with disabilities into society: Effective courses and strategies for an inclusive society

Integrating people with disabilities into society: Effective courses from Medkurs.no. Medkurs.no offers free online health courses, including courses on integrating people with disabilities into society. Although…

Dental health for people with special needs course: Everything you need to know to improve the oral health of people with special needs

Introduction to the Dental health for people with special needs course. Good dental health is important for everyone, and at Medkurs.no we are dedicated to offering free…

Motor development and treatment: A course for better understanding and practice

Motor development and treatment course: Free online health courses from Medkurs.no. Motor development is an important part of children's growth and well-being, and it is…

Course in habilitation and rehabilitation of children

An offer from Medkurs.no. We at Medkurs.no are proud to offer free online health courses, and today we would like to introduce our course…

Headphones and ear problems prevention course: Take care of your hearing

A free online course from Medkurs.no (not approved for official use). In a world where technology and convenience dominate, headphones have become an indispensable part…

Care for multiple sclerosis (MS) course: A step-by-step guide to supporting and helping your loved ones

Offered by Medkurs.no. Today there is growing interest in learning how best to take care of one's…

Course in cognitive impairments and support: Learn to understand and help people with cognitive challenges

Course in cognitive impairments and support: A free online health course from Medkurs.no. At Medkurs.no we want to contribute to greater knowledge and understanding of health challenges and…

Health psychology and behavior change course: Maximize your potential for a healthier lifestyle

Health psychology and behavior change course: Maximize your potential for a healthier lifestyle, from Medkurs.no. Introduction to the free online health psychology course from Medkurs.no. Welcome to Health psychology and…

Inclusion and diversity in health services course: Learn to create more inclusive and diverse health services

With Medkurs.no. In today's society it is more important than ever to ensure that health services are inclusive and diverse. The Inclusion and diversity in health services course…

Interdisciplinary rehabilitation course: A thorough guide for health professionals

Interdisciplinary rehabilitation course: A guide and introduction from Medkurs.no. Welcome to Medkurs.no, a website that offers free online health courses! In this article we will…

Course in mindfulness and self-care in health services: A guide for health professionals and managers

Course in mindfulness and self-care in health services: A guide to free online health courses from Medkurs.no. In a stressful and busy everyday life, it is especially…

Sleep and sleep disorders course: Learn how to improve your sleep quality and take control of your night's sleep

A free course from Medkurs.no. What is more important for our health and well-being than a good night's sleep? Sleep is of great importance for both our…

Course in work and health: Strengthen your competence and promote a good working environment

With Medkurs.no. In today's working life it is more important than ever to focus on work and health. Medkurs.no offers free online health courses, with the aim…

Bullying and harassment in healthcare course: Learn to prevent, handle and report inappropriate behavior

From Medkurs.no. At Medkurs.no we offer free online health courses, specially developed to give you knowledge of important topics in healthcare. In this article…

Health work in schools and kindergartens course: A guide for education and practice

Health work in schools and kindergartens course: Free online training from Medkurs.no. We take children's health and well-being seriously, which is why we at…

Course in child and adolescent psychiatry: Learn about mental health in children and adolescents

Course in child and adolescent psychiatry: Free online training for anyone interested. At Medkurs.no we understand that mental health in children and young people is a…

Course in functional medicine and integrative health: Explore a holistic and personalized approach to health and well-being

Welcome to Medkurs.no, your source of free online health courses! We are here to help you expand your knowledge and skills in…

Practical nursing and assistive devices course

An informative guide to free health courses at Medkurs.no. Introduction: In this article we focus on an exciting course: the Practical nursing and assistive devices course. This course…

Health-related rights and duties course: Understand your responsibilities and protect your rights

Health-related rights and duties course: Learn about your rights and responsibilities for free at Medkurs.no. In today's healthcare system it is more important than ever to understand…

Documentation and record-keeping in health services course: A guide for health professionals

Documentation and record-keeping in health services course: A guide for health professionals from Medkurs.no. As health services grow ever more complex, it is crucial for health professionals to have…

Professional ethics and relational care course: A guide to better practice

From Medkurs.no. In today's healthcare system it is more important than ever to be up to date and trained in professional ethics and relational care. At Medkurs.no we offer…

Course on children with special needs: A guide to supporting children's growth and development

Welcome to Medkurs.no, where we offer free online health courses specially designed to inform and empower everyone who works with or takes care of…

Occupational medicine and occupational health service course: Your guide to a healthy and safe workplace

Occupational medicine and occupational health service course: A guide to free online courses from Medkurs.no. Introduction to the occupational medicine and occupational health service course. Occupational medicine and occupational health service courses are crucial for…

Culture and collaboration in health services course: Improving the efficiency and quality of patient care through collaboration skills

Culture and collaboration in health services course: Learn to foster a positive work culture and improve collaboration in health services. Medkurs.no offers a range of free online health courses,…

Care for serious mental illness course: A guide to understanding and supporting patients and their families

Care for serious mental illness course: Help in understanding and supporting patients and their families. In a world where serious mental illnesses are becoming…

Errors and learning in health services course: Learn for free at Medkurs.no and improve patient safety

What is the Errors and learning in health services course? The Errors and learning in health services course is a program that focuses on teaching health professionals how to…

Intensive care and emergency medicine course: Prepare for emergencies and critically ill patients

Introduction to the Intensive care and emergency medicine course at Medkurs.no. Intensive care and emergency medicine are two critical areas of healthcare that involve patients in life-threatening situations and…

Patient education and self-management: Learn more about free health courses and self-help online

Introduction: What are patient education and self-management? Patient education and self-management are an important part of health services and of personal health and well-being. This means that the patient acquires…

Wound care and wound treatment course: A thorough guide for health professionals

From Medkurs.no. Introduction: The importance of the Wound care and wound treatment course. Proper wound care and treatment is crucial for patients' health and well-being. At Medkurs.no we offer free…

Healthy living and public health course: A path to a healthier society

From Medkurs.no. In today's busy everyday life, it can be challenging to find the time and motivation to take care of your own health and well-being.

Sickness absence management and inclusive working life course: A guide to a better work environment and productivity

From Medkurs.no, free online health courses. Introduction: In this article we take a closer look at the topic Sickness absence management and inclusive working life course. At Medkurs.no we offer…

Course in managing eating disorders: A guide to effective help and support

From Medkurs.no. Introduction: Why a course in managing eating disorders is so important. Eating disorders are a serious and growing concern in today's society. They can affect…

Ethics and morality in health services course: Strengthen your skills and promote good practice in healthcare

Medkurs.no. In today's ever-changing healthcare landscape it is more important than ever for health professionals to understand and handle ethical dilemmas in a responsible and…

Therapeutic conversation and empathy course: Strengthening communication and understanding

Therapeutic conversation and empathy course: Strengthening communication and understanding, offered free at Medkurs.no. Health professionals are on the front line when it comes…

Emergency medicine and first aid in sports arenas: A practical guide to safe and effective response to injuries and emergencies

Emergency medicine and first aid in sports arenas: An in-depth guide to safe and effective response to injuries and emergencies. Introduction: Welcome to Medkurs.no, where we offer free health courses…

Health promotion and prevention course: A guide to improving health and well-being

Health promotion and prevention course: Increase your knowledge and understanding of health and well-being with Medkurs.no. At a time when we are becoming increasingly aware of…

Course in managing anxiety and depression: A guide to better mental health from Medkurs.no

Introduction: Course in managing anxiety and depression. In today's society, anxiety and depression are two of the most common mental disorders people struggle with.

Project management in health services course

Learn how to lead and manage effective healthcare projects. Introduction: The Project management in health services course and Medkurs.no. Welcome to Medkurs.no, where we offer free online health courses. In this…

Parental guidance and support course: A guide to developing effective skills and strengthening family bonds

At Medkurs.no we offer free online health courses, including courses in parental guidance and support. We are proud to offer a range of resources and…

Nutrition and eating difficulties in elderly care course: An information guide to challenges and solutions

Nutrition and eating difficulties in elderly care course: A free information guide from Medkurs.no. In today's society, more and more older people get the opportunity to enjoy a long and…

Ergonomics and prevention of strain injuries: A course for a better work environment

Ergonomics and strain injuries course from Medkurs.no: Help create a better work environment for everyone. Have you ever thought about how important ergonomics is for you…

Quality improvement and patient safety course: An in-depth guide for health professionals

Medkurs.no presents: Introduction to the Quality improvement and patient safety course. In a world where knowledge of and focus on patient safety keep growing, it is important for health professionals…

ADL training and activity guidance course: Help and guidance for an active and self-reliant future

From Medkurs.no. At Medkurs.no we offer free online health courses, and in this article we give a thorough introduction to the ADL training and activity guidance course.

Physiotherapy and rehabilitation course: Promoting health and well-being through movement and treatment

Physiotherapy and rehabilitation course: Learn for free online with Medkurs.no. Physiotherapy and rehabilitation are important elements of healthcare that help people with various types of conditions…

Nursing home and home nursing care course: A guide to improving care and competence

From Medkurs.no. Introduction: Learn about the role of nursing home and home nursing care courses in healthcare. Nursing homes and home nursing care play a central role in healthcare and ensure that patients…

Assistive device use and adaptation course: Your guide to getting the most out of assistive devices in everyday life

Assistive device use and adaptation course from Medkurs.no: Your free guide to getting the most out of assistive devices in everyday life. Welcome to Medkurs.no! We are pleased to…

Interprofessional collaboration course: A guide to effective teamwork across professions

Interprofessional collaboration course: Learn effective teamwork across professions with Medkurs.no! Working with people from different professional backgrounds can be challenging. Understanding each other…

Intellectual disability and habilitation course: A guide to strengthening skills and supporting participants

Intellectual disability and habilitation course: A guide from Medkurs.no to strengthening skills and supporting participants. Introduction: Free health courses from Medkurs.no. Medkurs.no offers a wide range…

Grief and crisis work course: The road to support and understanding

Introduction: Explore free courses in grief and crisis work at Medkurs.no. Grief and crisis work can be very challenging, both for those directly affected and…

Nutrition in chronic diseases course: A roadmap to better health

Nutrition in chronic diseases course: A roadmap to better health. Free online health courses from Medkurs.no. In today's society, where chronic diseases are becoming increasingly…

Disability and accommodation course: A guide to understanding and supporting inclusion

Disability and accommodation course: A guide to understanding and supporting inclusion. In a world with an ever-growing understanding of diversity and inclusion, it is…

Course in birth and postnatal care: Everything you need to know

Medkurs.no. Introduction: Why choose a course in birth and postnatal care? At Medkurs.no we are dedicated to offering free online health courses to help…

Appropriate medication use for older patients course

Greater competence for health professionals. When it comes to medication use in older patients, it is important to have the right knowledge and skills to ensure…

Harm reduction and syringe safety course: A guide to safer injection practice

Harm reduction and syringe safety course: A guide to safer injection practice from Medkurs.no. In this article we explore the concept behind the harm reduction and syringe safety course, and…

Care for mental disorders course: Understanding, support and effective tools for helping

Care for mental disorders course from Medkurs.no: Help and support when it is needed most. Introduction: Why is the Care for mental disorders course important? In a…

Course in prevention and treatment of suicidal behavior: A guide to strengthening skills and saving lives

Course in prevention and treatment of suicidal behavior: How to strengthen skills and save lives. Suicidal behavior is a major global challenge, and it is important to be able to…

Infection control and prevention course: Protect yourself and others from harmful microbes

Medkurs.no: Free online infection control and prevention course. Today we face a growing threat from infectious diseases and resistant microbes. It is…

Employment law in health services

Employment law and personnel management in health services course: A guide to effective and lawful management from Medkurs.no. Welcome to Medkurs.no, where we offer free health courses…

Pain management for children and adolescents course: A guide for health professionals and caregivers

Pain management for children and adolescents course: A guide from Medkurs.no. Introduction: Free online health course on pain management in children and adolescents. Welcome to Medkurs.no, where…

Disease and injury prevention course: A guide to a healthier and safer life

Welcome to Medkurs.no, where you will find free online health courses for a healthier and safer life. We provide information about various disease and injury prevention courses,…

The health sector's role in climate and environmental issues course

An introduction by Medkurs.no. Environmental problems and climate change have become a major concern for the global community and have a direct impact on human health and

Elderly care and gerontology course: A guide to becoming a better caregiver

Elderly care and gerontology course: A helping hand for caregivers and professionals from Medkurs.no. In this post, we will talk about a very important topic, especially

Chronic illness care and rehabilitation course: A guide to better quality of life with free online health courses from Medkurs.no

Introduction: Meet the challenges of chronic illness with effective care and rehabilitation courses. Chronic diseases such as diabetes, cardiovascular disease and rheumatic disorders affect a significant proportion

Mental health and stress management course: A guide to improving quality of life

with Medkurs.no. Introduction: Welcome to the Mental health and stress management course at Medkurs.no. Mental health and stress management are important aspects of our lives. At Medkurs.no, we offer

Substance abuse treatment and addiction care course: A guide to understanding and working with addiction

Introduction: Medkurs.no and substance abuse treatment and addiction care. Medkurs.no offers free online health courses, including courses on substance abuse treatment and addiction care. Our courses are not approved for official

Working environment and safety in the health service course: A guide to good practice

Working environment and safety in the health service course: Secure the workplace with Medkurs.no. Working environment and safety are essential for ensuring well-being, productivity and security for both employees

Cultural competence and communication in the health sector

Course in cultural competence and communication in the health sector: Understand multicultural societies and strengthen communication in healthcare. Welcome to Medkurs.no, where we offer free online health courses! We focus on

Trauma treatment and crisis psychiatry course: Understanding, effective treatment and help

Welcome to Medkurs.no. We offer a range of free online health courses, including our popular course in trauma treatment and crisis psychiatry. We would like to point out that our courses

Learning and coping services course: A guide to understanding and choosing the right course for you

from Medkurs.no. There are many good reasons to take part in a learning and coping services course, whether it is about personal growth, development

Coping with loneliness and isolation course: A guide to better mental health and social connection

Coping with loneliness and isolation course – A free online health course from Medkurs.no. Many people experience feelings of loneliness and isolation in

Course in infection prevention and antibiotic resistance: Understanding and managing infections and resistance

Here at Medkurs.no, we are proud to offer free online health courses for those who want to learn more about important medical topics. In

Sexual health and contraceptive counseling course: A necessity for a healthier and safer society

Sexual health and contraceptive counseling course: Optimize your knowledge with Medkurs.no. Sexual health is fundamental to a person's overall well-being and quality of life, and to maintain

Digital competence in the health service course: A guide to improving health services in the digital age

Digital competence in the health service course: Improve health services in the digital age – Medkurs.no. In an increasingly digitized world, it is important that

Dental health and oral care course: A complete guide to improving your oral hygiene

Dental health is of crucial importance to our overall health and well-being. By maintaining good oral care, we can not only prevent dental problems but also reduce

Emergency medicine and resuscitation of children: A guide to saving lives

Emergencies and emergency medicine for children. Like adults, children can be affected by acute medical emergencies. These can range from choking and drowning to cardiac arrest and

End-of-life care course: Explore free online health courses from Medkurs.no

What does a free online end-of-life care course from Medkurs.no contain? Target groups for Medkurs.no's free online health courses. Our courses are available

Prevention and treatment of chronic wounds course: A comprehensive resource for healthcare personnel

Prevention and treatment of chronic wounds course – A free resource for healthcare personnel from Medkurs.no. Chronic wounds are a common problem for many people around

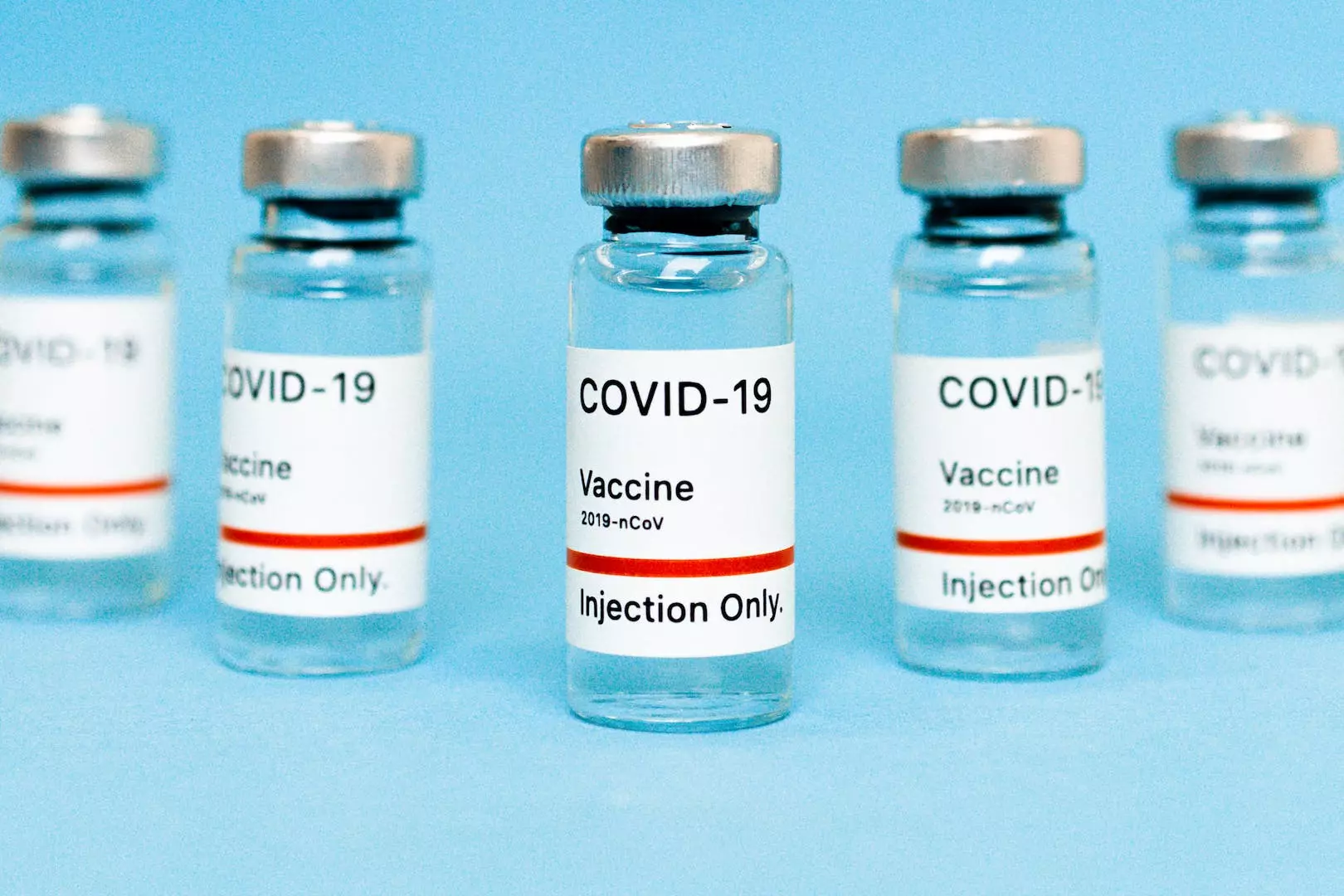

Vaccines and vaccination course: A thorough guide to understanding and mastering vaccination principles and practice

Vaccines and vaccination course: A thorough guide from Medkurs.no. At Medkurs.no, we are committed to providing high-quality information and training for healthcare personnel,

Course in health education and counseling: Free online courses for better communication and care

What are health education and counseling? Health education is about conveying health and care information in an educational and accessible way, so that patients and their relatives can

Communication with patients and relatives: A guide for healthcare personnel

Communication with patients and relatives: A free health course from Medkurs.no. Healthcare personnel across the country face the challenging and important task of communicating effectively

A thorough guide to psychiatric nursing courses

Explore your opportunity to learn with Medkurs.no. Introduction to psychiatric nursing courses for healthcare personnel. Psychiatric nursing is an important part of today's healthcare system.

Cancer care and cancer treatment course: A guide to professional and effective support

Cancer care and cancer treatment course: Improve your competence with Medkurs.no. Get to know the Cancer care and cancer treatment course from Medkurs.no. Medkurs.no offers a wide range of free

Course in health management and administration: A guide to career development in healthcare

from Medkurs.no. In a world of ever-increasing demands on the healthcare system, there is a growing need for qualified health managers and administrators who can handle these

Prenatal care course: What you need to know

Introduction – Welcome to Medkurs.no. Here at Medkurs.no, we offer free online health courses on a range of topics, including a prenatal care course designed for expectant

Physical activity and health course: Guide to a healthier and more active life

Physical activity and health course: A guide to a healthier and more active life with Medkurs.no. In today's modern world, where many of us spend

Pediatric nursing course – A guide to choosing the best course for your career

Pediatric nursing course – A guide to free online courses from Medkurs.no. Why take a pediatric nursing course? Pediatric nursing courses, also known as child care

Course in children's development and care needs: A guide to understanding and supporting children's growth and well-being

Course in children's development and care needs: Take part in Medkurs.no's free online guidance. At Medkurs.no, we strive to provide relevant and practical information

Course in mental health first aid: Learn to support and help others in mental health emergencies at Medkurs.no

Introduction: What is a course in mental health first aid, and why is it important? Mental health first aid is about being able to help and support a person who

An introduction to geriatric nursing courses

What should you know about geriatric nursing courses? Geriatric nursing courses are in high demand in today's society because of the growing proportion of elderly people in

Cognitive behavioral therapy course: Learn effective techniques to improve your mental health and quality of life

from Medkurs.no. At Medkurs.no, we offer free online health courses to help you improve your mental health and quality of life. Our online courses,

Violence prevention and conflict management in healthcare

– A free online health course from Medkurs.no. Introduction: Why are violence prevention and conflict management in healthcare important? Violence prevention and conflict management in healthcare are of great importance, both for patients and healthcare personnel.

Emergency medicine and resuscitation of adults: Life-saving measures and procedures

– A free online health course from Medkurs.no. Introduction: Why are emergency medicine and resuscitation important for adults? Emergency medicine is an exciting and challenging field that

Communication and conflict management in the health sector

Learn how to improve collaboration in the workplace. Medkurs.no – free online health courses. Communication and conflict management are essential for everyone who works in

Pain management course: Learn effective pain relief techniques and improve patient care

from Medkurs.no. At Medkurs.no, we offer free online health courses for healthcare personnel and others who are interested, and with this we want to give you an introduction to

Care for people with autism course: A guide to becoming an effective caregiver

Care for people with autism course: A free online health course from Medkurs.no. Are you wondering how you can become a more effective caregiver for people with

Palliative care and symptom relief course: Master the skills for better patient care

from Medkurs.no – your source of free online health courses. Medkurs.no offers healthcare personnel a range of free courses on various medical topics to help you

Course on the use of coercion and force under the Municipal Health and Care Services Act, chapter 9: How to draft decisions

Course on the use of coercion and force under the Municipal Health and Care Services Act, chapter 9: How to draft decisions and find the right course on

Dementia care course: Your path to better understanding and support for people with dementia at Medkurs.no

Introduction to the dementia care course. Dementia is an umbrella term for various diseases that affect the brain and make it difficult for a person to carry out everyday tasks.

Course in coping strategies for aggression and violence

from Medkurs.no. At Medkurs.no, we offer a range of free online health courses to strengthen your ability to handle various everyday challenges. Our

Basic course in medication management and dosage calculation for social educators (vernepleiere)

– A free online course from Medkurs.no. Are you a social educator (vernepleier) who wants to strengthen your knowledge of medication management and dosage calculation? Then this free online course

Mental health and substance abuse care course: The path to better understanding and support

Mental health and substance abuse care course: The path to better understanding and support from Medkurs.no – free online health courses. Introduction: What is mental health and substance abuse care

Basic course in medication management and dosage calculation for nurses

Introduction to the basic course in medication management and dosage calculation for nurses. Nurses play a crucial role in the treatment and care of patients, and an important part of this is medication management and dosage calculation. In this

Hygiene course: Learn the most important principles and methods for a clean and safe workplace

Welcome to Medkurs.no! At Medkurs.no, we offer free online health courses, and today we would like to present one of our most useful and relevant

Course in the rules for the use of coercion under the Patient and User Rights Act, chapter 4A: A guide to better understanding and practice

Course in the rules for the use of coercion under the Patient and User Rights Act, chapter 4A: Free online health courses from Medkurs.no. Introduction: Get to know the rules

Medication management course – everything you need to know for correct medication and patient safety

Introduction: Take control of medication with the Medication management course. Welcome to Medkurs.no, your platform for free online health courses. Although our courses are not approved

CPR course: Learn life-saving skills and make a difference

with Medkurs.no. You may have heard about CPR courses and how important it is to know life-saving first aid. At Medkurs.no, we offer free health courses

Environmental worker (miljøarbeider) course: A guide to kickstarting your career in environmental work

from Medkurs.no. Introduction: What is an environmental worker (miljøarbeider), and why should you consider a career in environmental work? As environmental crises and sustainability become increasingly

First aid course for children: Learn to save lives and prevent injuries in an emergency

First aid course for children: Learn to save lives and prevent injuries in an emergency. Accidents and injuries involving children are very common. It is important to learn

Basic course in infection prevention and hygiene

from Medkurs.no. If you are interested in learning more about infection prevention and hygiene, Medkurs.no has a great selection of free online health courses, especially

Course in basic medication management

Medication management: Learn basic skills in medication management at Medkurs.no. Introduction: Why is medication management important? Medication management is a critical process in the health sector, and basic training in

First aid course: Learn to save lives and handle emergencies effectively

with Medkurs.no. Being able to provide first aid is an invaluable skill that can save lives and minimize injuries in serious emergencies. Medkurs.no offers free health courses

First aid course for adults: Learn vital skills for handling emergencies

Introduction: The importance of first aid for adults. First aid courses for adults are essential for being able to deal with the emergencies that can arise in everyday life. Knowing how

Infection prevention course: Everything you need to know to protect yourself and others

– a free online course from Medkurs.no. At Medkurs.no, we are proud to offer free online health courses, including our popular infection prevention course. Our goal

Capacity to consent course (health): A guide for healthcare personnel

Capacity to consent course (health): A free online guide from Medkurs.no. Introduction: Why capacity to consent matters in healthcare. Capacity to consent is a critical skill for healthcare personnel in their

Nutrition course: Learn how to improve your health through a proper diet

– An introduction to free online health courses from Medkurs.no. Nutrition has a major impact on how we feel, how we function in everyday life

First aid course for the elderly: Learn life-saving skills and confidence in emergencies

through free online health courses. At Medkurs.no, we offer a range of free health courses, including the First aid course for the elderly. It is important to emphasize that these courses

GDPR course (health): An important step toward protecting patients' privacy

GDPR course (health): Why it is important to protect patients' privacy. In this article, we will explore the significance of the GDPR in the health sector and why it can

Advanced epilepsy course: Expand your knowledge and understanding

Advanced epilepsy course at Medkurs.no: Expand your knowledge and understanding. Epilepsy is a neurological condition that affects around 50 million people worldwide. To

Introductory course for working in primary healthcare

Your path into a meaningful career with free courses from Medkurs.no. Do you dream of working in healthcare but are unsure

Professional topic courses in health – Strengthen your competence and provide better health services

Professional topic courses in health – Strengthen your competence with free online health courses from Medkurs.no. Medkurs.no offers free online health courses for those who want greater awareness

BPA course for personal assistants: A guide to professional and personal growth

from Medkurs.no. Introduction: What BPA is, and the importance of BPA courses for personal assistants. User-controlled personal assistance (brukerstyrt personlig assistanse, BPA) is a scheme that gives people with disabilities

Epilepsy course: Increase your knowledge and strengthen your skills

– Medkurs.no. At Medkurs.no, we offer free online health courses to help you learn more about various health topics and conditions, and epilepsy courses

Diabetes course: A complete guide to effective diabetes treatment and support

from Medkurs.no. Introduction to the Diabetes course from Medkurs.no. Diabetes is a disease that can affect people of all ages and walks of life. There are two main types of

BPA – Basic course in work management

BPA – Basic course in work management: A guide from Medkurs.no. At Medkurs.no, we are committed to giving you quality information and knowledge that helps you in

User-controlled personal assistance (brukerstyrt personlig assistanse) – BPA

A guide from Medkurs.no. At Medkurs.no, we offer free online health courses for anyone who wants to learn more about various health-related topics. Our

How to drive economically – Our 7 tips

Are you wondering how to drive economically? Economical driving is a way to save both the environment and your wallet. It is about driving

How to pass your driving test – Here are our 7 tips

Passing the driving test can be a challenge for many. This may be due to stress or to having had too little driving practice. But with

Intermittent catheterization: What is it and how do you do it?

If you have trouble emptying your bladder normally, intermittent catheterization can be a good solution to your urination problems. Intermittent catheterization is an alternative way

How to become a plastic surgeon: a path to shaping the future

Plastic surgery, a branch of medicine focused on reconstruction and aesthetics, has fascinated humanity for hundreds of years. From ancient Egyptian wigs to today's

5 ways marketers can tackle the challenging 2023 Christmas shopping season

As we all know, the Christmas shopping season is one of the most important times of the year for marketers. It is a time when businesses can get the most

5 advantages of selling on a B2B marketplace

B2B is the business model in which commercial exchange takes place between companies. Find out what it involves and what advantages it offers. This is definitely not

Gray hair – embrace it or treat it? Here is everything you need to know about why you have gray hair.

This post is sponsored. As we get older, our hair changes color. For some, this can mean strands of gray hair mixed in with their natural